Music is a predominant way we experience artistry, poetry, and international voices.

The tools we use to interact with music, however, rarely account for the cultural and contextual richness of each song.

Streaming platforms are algorithm-driven file browsers, and little more.

That's great for passive listening, encouraging us to 'set it and forget it.' However, this design strategy greatly limits the diversity and depth of our engagement with music.

Spotify tracks how the entire world listens to music, yet it does not let users find new music from this data. Its algorithm overwhelmingly recommends things that you've already heard.

In song-based radios, genre mixes, and even Spotify-curated playlists, the music that you can 'discover' is becoming, more and more, variations of what you already know.

Not to mention that there is no way to see a song's genre, relative mood, relative audio qualities, or popular moments, nor a way to find other songs through these qualities, despite the fact that Spotify has all of this data.

While these are systemic issues, they are also design challenges that can be partially addressed by a third-party intervention.

Tooling for Music-turgy

Creating an intelligent listening companion.

Did you know the lyrics on streaming platforms are crowd-sourced? Thousands of hours of unpaid labor happen on the Musixmatch platform, where users transcribe, sync, and translate lyrics for free. Musixmatch then puts this data behind a paywall and an expensive API.

While I don't agree with this business model, it points to the enormous potential of existing community-driven data. There is an expansive ecosystem of consumer sites that use this data, but none that integrate it into the listening experience.

(Spotify has tried multiple times to integrate annotations, but without the wealth of community-driven data.)

Hype Machine

Journalism aggregation, music-blog-based charts.

Rate Your Music

Power user reviews of music, community tags and metadata.

Genius

Lyrics, annotations, and secondary artist tracking.

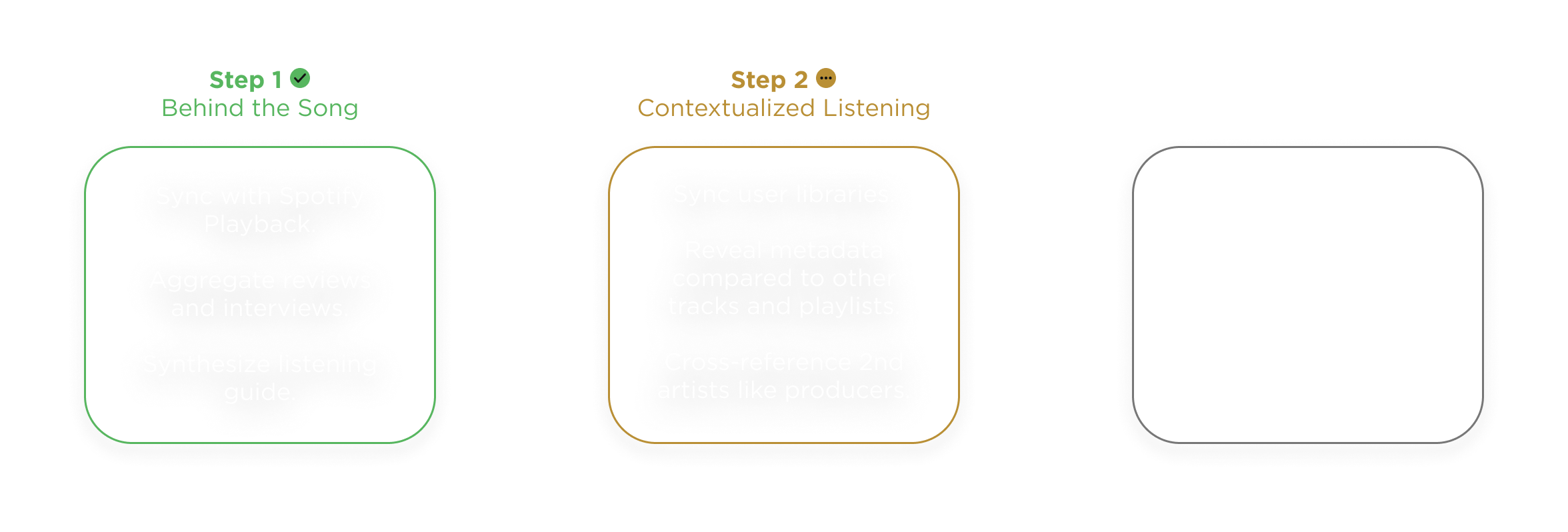

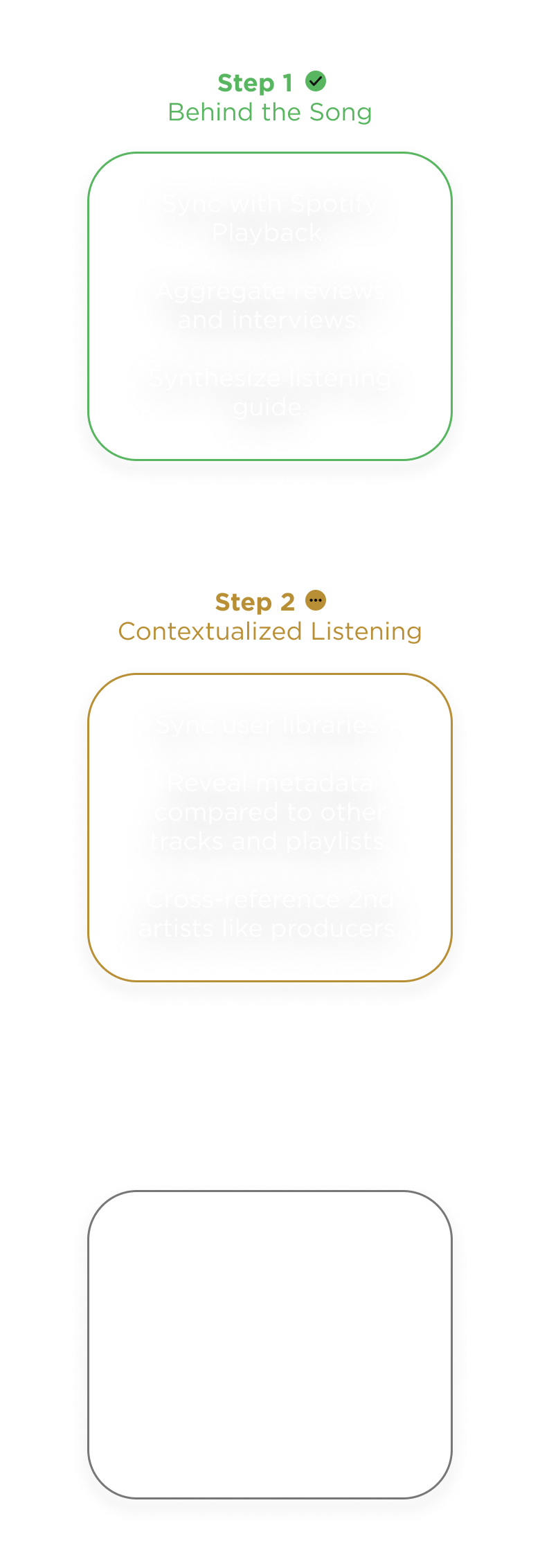

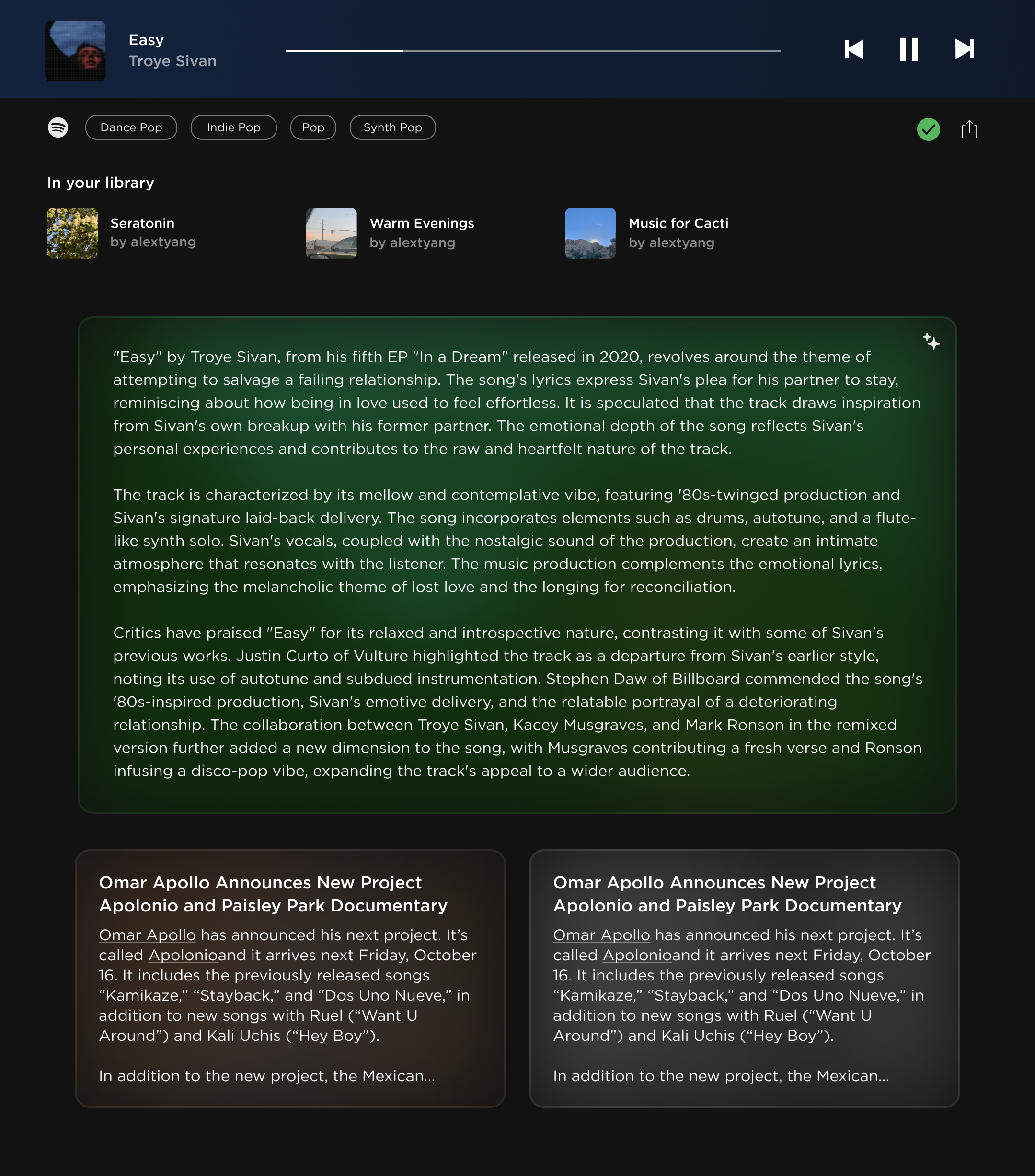

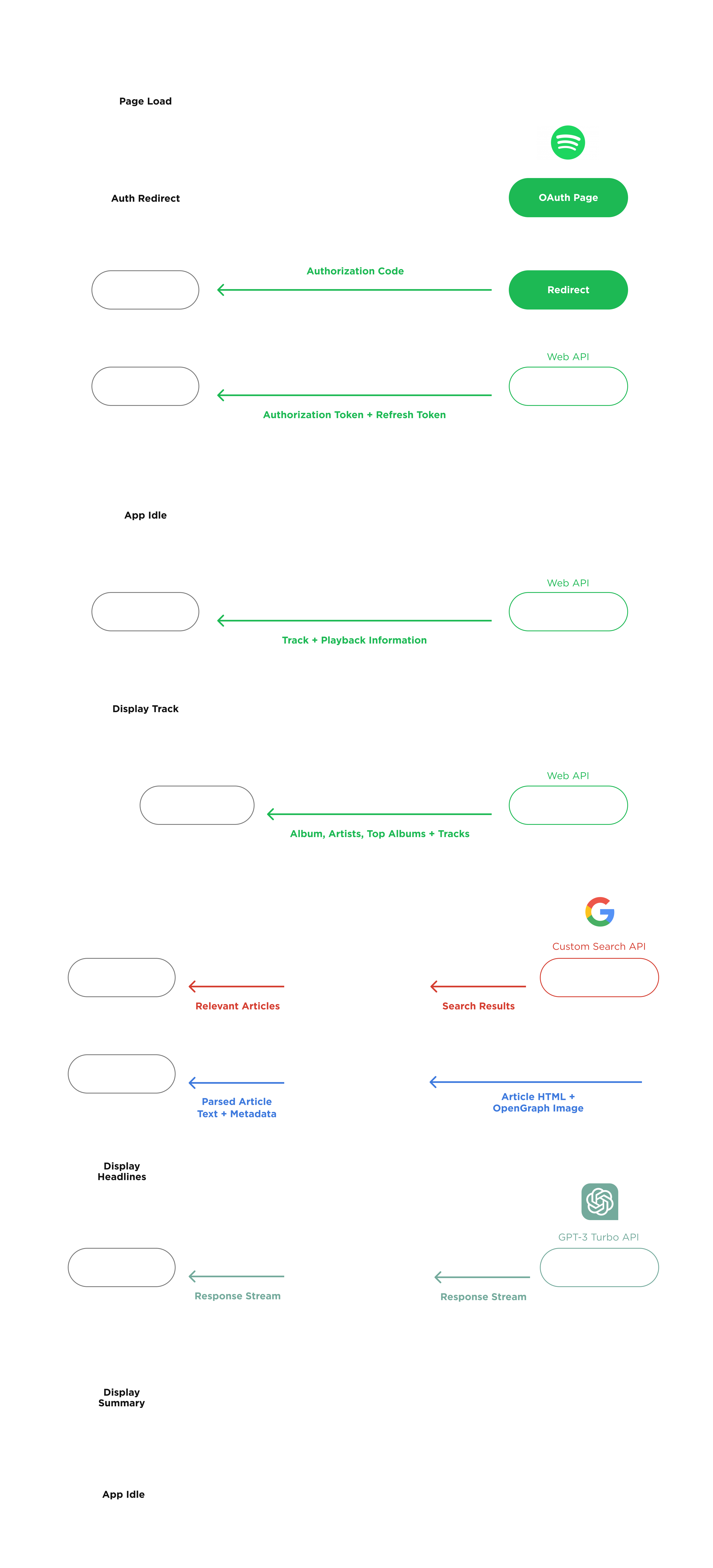

Inspired to expand and deeply integrate what existing datasets can provide, I drafted a technical roadmap for the enhanced-listening experience.

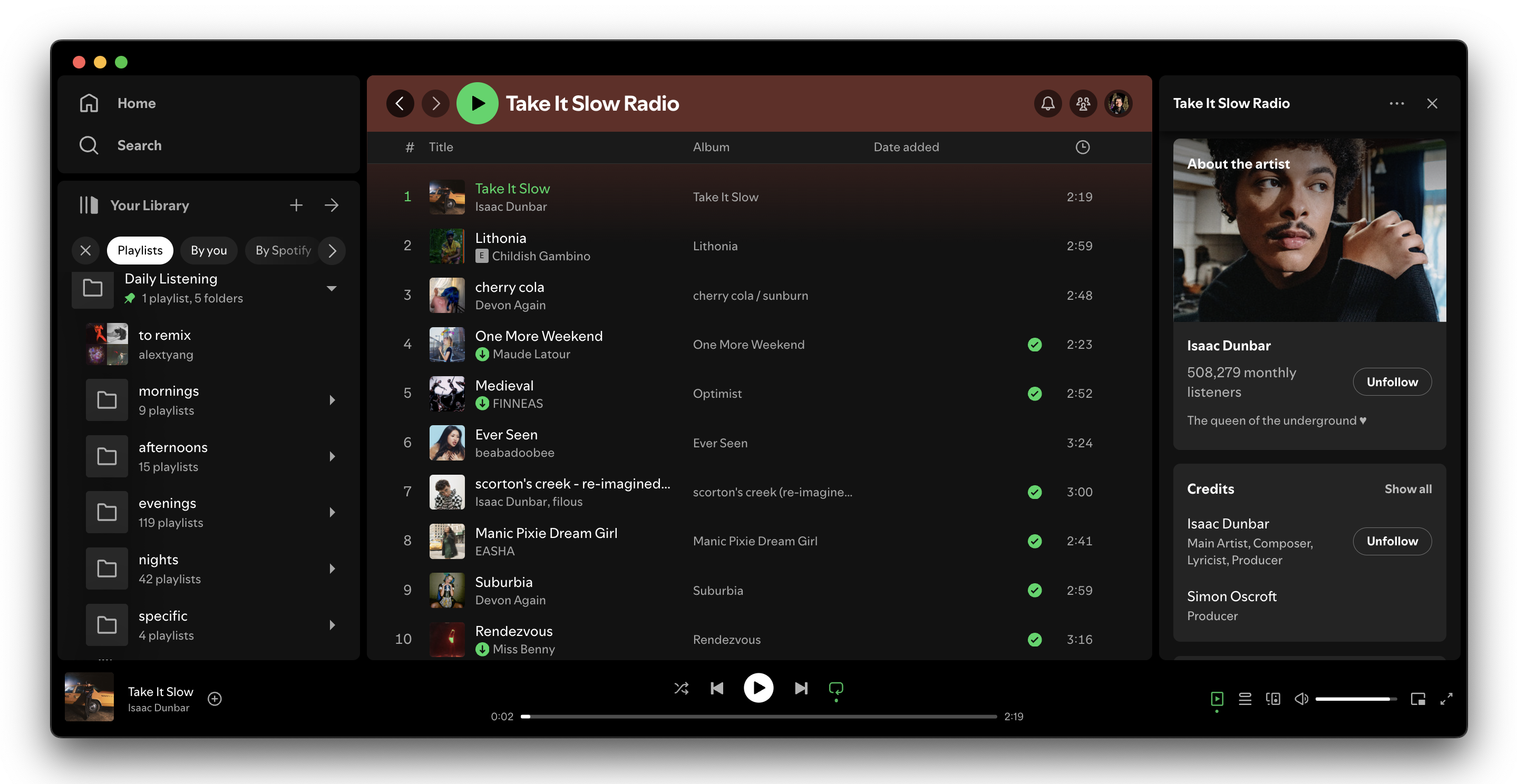

While designing a prototype to support the first round of features, I decided to align the playback and library features with the Spotify design system as much as possible, so that the expanded features feel like a natural extension of the platform.

Finally, I dove into the technical implementation of the prototype, using the familiar React + Next.js tech stack from Accountable Brands. The three v1 features are Spotify sync, journalism aggregation, and smart summarization.

Currently, the app makes direct requests to Spotify to enable continuous granular updates, reduce sync latency, and minimize security vulnerabilities, but this may change if I need to implement a rate limiter.

For the corresponding data collection, requests are mediated by the server out of API necessity. These requests had to be broken up into three chunks (search, article previews, and summarization) to prevent a timeout and to give as much feedback as possible during lengthy fetch times.
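The chunking described above can be sketched as a small client-side orchestrator. This is a minimal sketch under stated assumptions, not the app's actual code: the stage names, the `StageUpdate` shape, and the injected handler functions are all hypothetical. In a real app each handler would presumably wrap a request to its own server route, so that no single request runs long enough to time out.

```typescript
// Hypothetical stage names mirroring the three chunks: search, previews, summary.
type Stage = "search" | "previews" | "summary";

interface StageUpdate {
  stage: Stage;
  status: "started" | "done" | "failed";
}

// Each handler is injected so the sketch stays runnable without a server.
// In practice, each one would likely call a separate server endpoint.
interface StageHandlers<S, P> {
  search: () => Promise<S>;
  previews: (results: S) => Promise<P>;
  summary: (previews: P) => Promise<string>;
}

// Runs one stage and reports its lifecycle so the UI can show feedback.
async function runStage<T>(
  stage: Stage,
  work: () => Promise<T>,
  onUpdate: (update: StageUpdate) => void
): Promise<T> {
  onUpdate({ stage, status: "started" });
  try {
    const result = await work();
    onUpdate({ stage, status: "done" });
    return result;
  } catch (error) {
    onUpdate({ stage, status: "failed" });
    throw error;
  }
}

// Runs the three chunks in order, feeding each result into the next stage.
async function runPipeline<S, P>(
  handlers: StageHandlers<S, P>,
  onUpdate: (update: StageUpdate) => void
): Promise<string> {
  const results = await runStage("search", handlers.search, onUpdate);
  const previews = await runStage("previews", () => handlers.previews(results), onUpdate);
  return runStage("summary", () => handlers.summary(previews), onUpdate);
}
```

Because every stage reports `started` before its request goes out, the interface can show which step is in flight rather than a single long spinner.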

An unexpectedly large challenge was continuously parsing the summary as it streams in, especially as I wanted to identify contextually important items like other albums, sibling songs, etc.

After grabbing crucial track metadata, the app begins to fetch these larger contextual clues from Spotify in the background. When the summary begins to stream, I normalize the writing style, identify these phrases, and wrap them in type-based tooltip elements that contain built-in actions.
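One way to approach the phrase-identification step is to re-annotate the full accumulated text every time a chunk arrives or a new entity becomes known. The following is a minimal sketch, assuming exact, case-insensitive name matching. The `Entity`, `Segment`, and `SummaryStream` names are hypothetical, and the style normalization step is left out. A renderer would map each `entity` segment to a tooltip element of the matching kind.

```typescript
type EntityKind = "album" | "track" | "artist";

interface Entity {
  name: string;
  kind: EntityKind;
}

type Segment =
  | { type: "text"; value: string }
  | { type: "entity"; value: string; kind: EntityKind };

// Escapes regex syntax characters so names like "Mr. Brightside" match literally.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Splits text into plain and entity segments.
function annotate(text: string, entities: Entity[]): Segment[] {
  const known = entities.filter((e) => e.name.length > 0);
  if (known.length === 0) return text ? [{ type: "text", value: text }] : [];

  // Longest names first, so a longer title wins over a shorter one it contains.
  const sorted = known.slice().sort((a, b) => b.name.length - a.name.length);
  // Lookarounds keep a name from matching inside a longer word.
  const pattern = new RegExp(
    "(?<![\\p{L}\\p{N}])(?:" +
      sorted.map((e) => escapeRegExp(e.name)).join("|") +
      ")(?![\\p{L}\\p{N}])",
    "giu"
  );

  const segments: Segment[] = [];
  let cursor = 0;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(text)) !== null) {
    const hit = match[0];
    if (match.index > cursor) {
      segments.push({ type: "text", value: text.slice(cursor, match.index) });
    }
    const entity = sorted.filter((e) => e.name.toLowerCase() === hit.toLowerCase())[0];
    if (entity) {
      segments.push({ type: "entity", value: hit, kind: entity.kind });
    } else {
      segments.push({ type: "text", value: hit });
    }
    cursor = match.index + hit.length;
  }
  if (cursor < text.length) segments.push({ type: "text", value: text.slice(cursor) });
  return segments;
}

// Accumulates streamed chunks and known entities, re-annotating on every change.
class SummaryStream {
  private text = "";
  private entities: Entity[] = [];

  push(chunk: string): Segment[] {
    this.text += chunk;
    return annotate(this.text, this.entities);
  }

  addEntities(found: Entity[]): Segment[] {
    this.entities = this.entities.concat(found);
    return annotate(this.text, this.entities);
  }
}
```

Re-scanning the whole text is wasteful for long summaries, but it handles names split across chunk boundaries and lets entities that arrive late be applied to text that has already been rendered.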

The result is a smarter summary, in a way that prompting couldn't achieve alone. As the app slowly expands its knowledge base on a song, the summary is retroactively enhanced to reflect these new insights.

Version 0.1.0

The baseline application.

Published now is a fully functional proof of concept: Spotify sync (once approved), journalism aggregation, and smart summarization.

Playback

Controls, inspired by Spotify UI, are only possible once Spotify approves the app. For demonstration purposes, I replaced them with a search bar that uses Spotify's user-less API.

To keep up with external playback changes, the app re-syncs whenever the page loses and regains focus. I found that a manual refresh button, in conjunction with this intelligent refreshing, was a viable alternative to constant background syncing.
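The focus-based refresh can be sketched roughly as follows. This is an illustration rather than the app's implementation: the `syncOnFocus` helper and the `FocusSource` interface are hypothetical, and the minimum interval between automatic syncs is my own assumption, added so that rapid tab switching doesn't trigger a burst of requests. In the browser, `window` would be passed as the source.

```typescript
// Minimal shape of an object that emits "focus" events (e.g. window in a browser).
interface FocusSource {
  addEventListener(type: "focus", listener: () => void): void;
  removeEventListener(type: "focus", listener: () => void): void;
}

interface SyncControls {
  refresh: () => void; // manual refresh button: always syncs
  stop: () => void;    // detach the focus listener
}

function syncOnFocus(
  source: FocusSource,
  sync: () => void,
  minIntervalMs: number = 2000,       // assumed throttle window, not from the app
  now: () => number = () => Date.now() // injectable clock for testing
): SyncControls {
  let lastSync = -Infinity;

  const run = (force: boolean): void => {
    const t = now();
    // Skip automatic syncs that arrive too soon after the previous one.
    if (!force && t - lastSync < minIntervalMs) return;
    lastSync = t;
    sync();
  };

  const onFocus = (): void => run(false);
  source.addEventListener("focus", onFocus);

  return {
    refresh: () => run(true),
    stop: () => source.removeEventListener("focus", onFocus),
  };
}
```

The manual `refresh` bypasses the throttle, which matches the idea of pairing automatic refreshing with an explicit button instead of polling in the background.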

Summarization

The star of the show: dynamic summaries try to capture the context, musicology, and critical response of the song. The prompt and format are a continual work in progress, as I improve accuracy and depth.

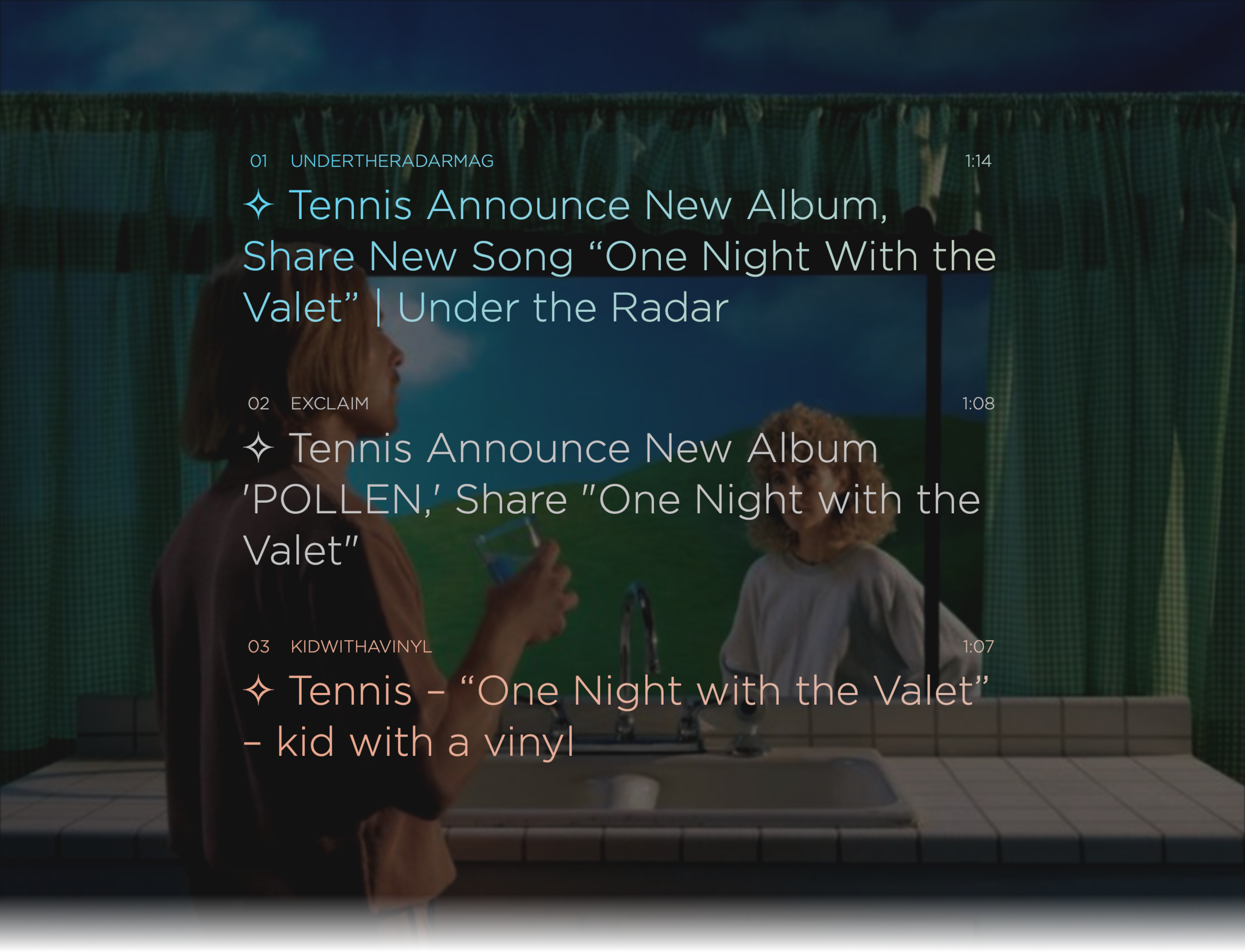

Journalism

The app uses a custom search engine to find music journalism about the song. Then, articles are sorted by level of relevance to the track and word count (converted to estimated reading time).
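The ordering described above could look something like this sketch. The `Article` shape, the numeric `relevance` score, the 200 words-per-minute reading speed, and the choice to break relevance ties by putting longer articles first are all assumptions made for illustration. The source only says that relevance and word count are both used.

```typescript
interface Article {
  title: string;
  relevance: number; // hypothetical score, higher means more relevant to the track
  wordCount: number;
}

// Assumed average reading speed; the app may use a different figure.
const WORDS_PER_MINUTE = 200;

// Converts a word count into a whole number of minutes, with a floor of one.
function readingMinutes(wordCount: number): number {
  return Math.max(1, Math.ceil(wordCount / WORDS_PER_MINUTE));
}

// Sorts by relevance first, then by length, without mutating the input.
function rankArticles(articles: Article[]): Article[] {
  return articles
    .slice()
    .sort((a, b) => b.relevance - a.relevance || b.wordCount - a.wordCount);
}
```

Copying the array before sorting keeps the original search results intact, which is convenient if the same list is also rendered elsewhere.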

I restricted the use of external material to what I believe are exclusively transformative and referential purposes. While case law is still developing around this, I used Google's AI search summaries as a guideline for what is appropriate.

The current design is a significant departure from the prototype. I decided to emphasize the headlines as a distinction between original and external content. Ultimately, the intention of the summary is to refer users to the original content, not to replace it.